Human Conversations with a Robotic Head

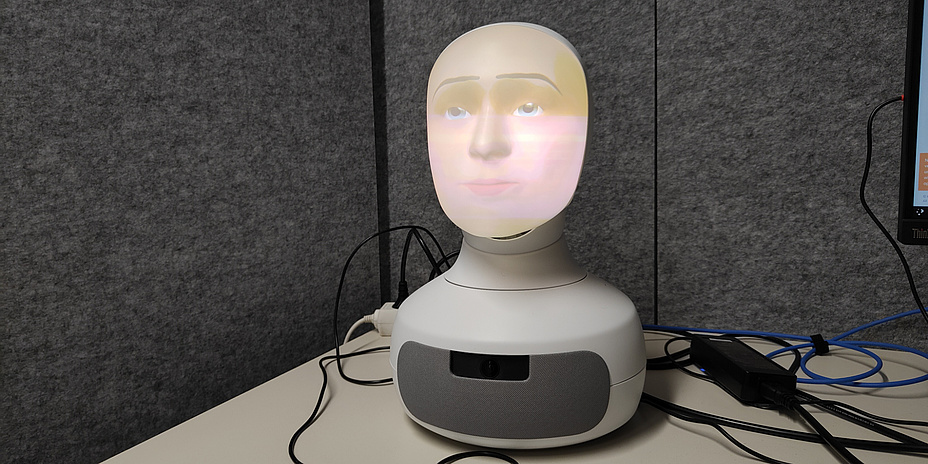

A concrete answer to the question asked, the right facial expressions and a natural-looking gaze directed at the person asking the question: even if the head on the table is clearly recognisable as a robot, it behaves in a strikingly human way. This is precisely what Furhat, the social robot, is designed to do. It was originally developed at the Royal Institute of Technology (KTH) in Sweden and has since become an independent company, Furhat Robotics. In addition to its commercial applications, for example as an airport guide, language teacher or unbiased recruiter, it is particularly in demand in research, including at the Signal Processing and Speech Communication Laboratory at Graz University of Technology (TU Graz), which is closely linked to KTH via the university network Unite!

“The original idea behind Furhat was interaction: how do I talk to a robot, how does it respond? With these initial ideas, the inventors wanted to test scientific hypotheses,” explains Barbara Schuppler from the Institute of Signal Processing and Speech Communication. Research with Furhat is now going in various directions. The individual components, such as facial expressions, gestures, intonation and around 200 different language models for around 40 languages and some regional dialects, offer many opportunities for research not only into human-robot interaction, but also in speech science and speech recognition, in educational support and in the therapeutic field.

Recognising human signals

A major focus is on the robot recognising the other person’s signals in order to work out when it is its turn to speak. “It’s about communicating together. Furhat looks at the person who is speaking, and when it is talking to two people, for example, it switches its gaze back and forth. It is very attentive. It is now possible to adapt its emotions to the context of the conversation,” says Barbara Schuppler. The feedback Furhat gives while the other person is speaking is also part of the communication and is the subject of Michael Paierl’s thesis. He wants to teach the robot to say “hmm”, “mhm” or something similar with the right timing and intonation to make the conversation even more natural.

Even if we often don’t realise it, people feel the need to receive some kind of feedback when they are saying something, even if they don’t expect a response at the time. They just want to know that they are being understood. Such intermediate reactions are especially important in educational support, when content is worked out together with the social robot. “Let’s assume the robot asks how the gravitational field works and the pupil begins to explain. You need a system that provides feedback during the explanation,” says Michael Paierl.
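To make the timing problem more tangible, the following is a minimal sketch of a pause-based rule for deciding when a listener could insert a short “mhm”. It is purely illustrative and is not the model being developed in the thesis. The function name, the frame length and all thresholds are assumptions chosen for the example, and the input is assumed to be a list of voice-activity flags, one per audio frame.

```python
from typing import List


def backchannel_times(voiced: List[bool], frame_ms: int = 10,
                      min_speech_ms: int = 1000, min_pause_ms: int = 200,
                      refractory_ms: int = 1500) -> List[int]:
    """Return times (in ms) at which a short 'mhm' could be inserted.

    Hypothetical rule: respond once the speaker has talked for at least
    min_speech_ms and then paused for min_pause_ms, but never more often
    than once per refractory_ms. All thresholds are illustrative guesses.
    """
    times: List[int] = []
    speech_ms = 0                # speech accumulated since the last long pause
    pause_ms = 0                 # length of the current pause
    last_emit = -refractory_ms   # allows a response right at the start

    for i, is_voiced in enumerate(voiced):
        now = (i + 1) * frame_ms  # time at the end of this frame
        if is_voiced:
            speech_ms += frame_ms
            pause_ms = 0
            continue

        pause_ms += frame_ms
        # Act only in the frame where the pause first reaches the threshold.
        just_crossed = pause_ms >= min_pause_ms > pause_ms - frame_ms
        if just_crossed:
            if speech_ms >= min_speech_ms and now - last_emit >= refractory_ms:
                times.append(now)
                last_emit = now
            speech_ms = 0  # a long pause ends the current stretch of speech
    return times
```

In this sketch, 1.2 seconds of speech followed by a pause produces one response 200 ms into the pause, while a short remark of half a second produces none. A model learned from recordings, as described in the article, would replace these fixed thresholds and would also have to choose the intonation.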

From details to an entire facial expression

To implement this system, he examines video recordings of people talking to each other, extracts and analyses the acoustic properties and builds a model from which Furhat can learn this type of interaction. When Furhat itself speaks, its facial expressions already make it possible to follow what it intends to say and in what context, since a lot of research on its facial expressions has been done at KTH and Furhat Robotics. For this, the developers examined various relevant areas of the face in detail, such as the edges of the eyebrows, lips and cheeks, and attached reflective dots to them in order to track their changes in position during various conversations.

“The eyebrows and the entire area of the eyes in particular are very much in tune with the speech melody when we ask a question, pause and think, become serious or loud. This is all reflected in the eyes at the top,” says Barbara Schuppler. When it comes to what is being said, the mouth is the relevant area of the face. There are thus different levels of communication, which are reflected in different areas of the face and which Furhat is supposed to visualise as realistically as possible.

A face made of several layers

This visualisation is produced by a projector built into the back of the head, which projects the facial features onto the semi-transparent face. It works in several layers. The rearmost layer is the face itself; eyes, eyebrows, lips, cheeks and forehead each have their own layer. Compared with robots whose facial expressions are controlled by small motors under a flexible facial surface, Furhat’s expressions are much more dynamic and natural. The eyes do not stare into space, but can really look at the person opposite thanks to facial recognition, and they remain in constant motion, just like human eyes.

There is one restriction that you still have to deal with when talking to Furhat. Speech recognition and speech synthesis have been outsourced to big providers such as Google or Amazon due to the hardware required for this. This expands the voice and language repertoire, but despite the large hardware capacities, an answer comes with a somewhat unnatural delay. If a large language model is additionally running in the background to increase the range of conversation topics, it may take a few seconds for Furhat to respond. To avoid this, the subject area that can be discussed is programmed locally in advance and Furhat’s response options are limited to this.
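The idea of limiting Furhat to a locally programmed subject area can be pictured with a small sketch. The code below is a hypothetical illustration and does not use the Furhat SDK or any real Furhat API. The topics, keywords, answers and the function name `reply` are all invented for the example, which borrows the airport-guide scenario mentioned earlier.

```python
import re
from typing import Dict, FrozenSet

# Hypothetical, locally stored subject area: keyword sets mapped to answers.
RESPONSES: Dict[FrozenSet[str], str] = {
    frozenset({"gate", "boarding"}):
        "Your gate is shown on the departure board.",
    frozenset({"toilet", "restroom", "bathroom"}):
        "The nearest restroom is next to the information desk.",
    frozenset({"luggage", "baggage", "suitcase"}):
        "Baggage claim is on the ground floor.",
}

# Used whenever the question falls outside the prepared subject area.
FALLBACK = "Sorry, I can only help with questions about the airport."


def reply(utterance: str) -> str:
    """Pick the prepared answer whose keywords best match the utterance."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    best_answer, best_overlap = FALLBACK, 0
    for keywords, answer in RESPONSES.items():
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_answer, best_overlap = answer, overlap
    return best_answer
```

Because the lookup runs locally and only over a handful of prepared answers, no round trip to a remote language model is needed for this step. The price is the one the article names: anything outside the prepared topics gets the fallback answer.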

Dialect as a stumbling block

This makes Furhat easy to use as a dialogue partner for specific topics, but a spontaneous chat about anything and everything will have to wait a little longer, at least if it is to feel like a conversation with a human. And if you talk to Furhat in an Austrian dialect, you might encounter a few difficulties even within the limited subject area. Standard German and English deliver the best results, while speech recognition reaches its limits with dialects for which little data is available, such as numerous Austrian variants. However, Barbara Schuppler is also researching how to improve speech recognition despite the limited data available for Austrian German. Perhaps this will benefit Furhat in the future.

Contact

Barbara SCHUPPLER

Ass.Prof. Mag.rer.nat. Dr.

TU Graz | Institute of Signal Processing and Speech Communication

Phone: +43 316 873 4366

b.schuppler@tugraz.at