When people and drones merge

The paper ‘Drone-Augmented Human Vision: Exocentric Control for Drones Exploring Hidden Areas’ was recently published in the journal ‘IEEE Transactions on Visualization and Computer Graphics’.

Non-dangerous missions in dangerous situations

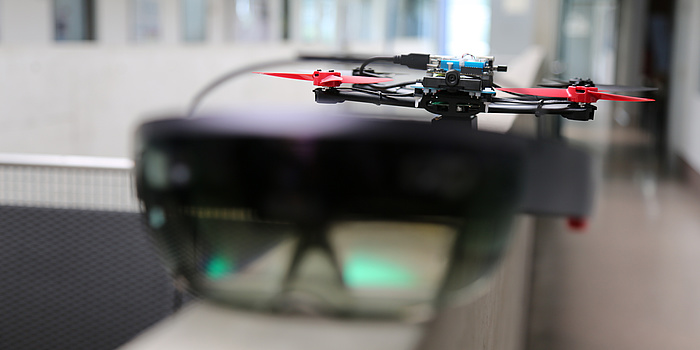

The newly developed system envisages first sending drones into the danger area to reconnoitre the situation. ‘This is of course not new. But our concept goes a step further. Navigating a drone using a joystick or similar in a narrow space riddled with obstacles is very difficult and requires quite a lot of skill. On top of this, it’s not even possible to control a drone which is out of the range of vision of the controller,’ explains Okan Erat. To solve the vision problem, footage from the drone’s camera is used to make a virtual image of the actual environment in which the user can immerse herself or himself by means of virtual reality glasses – in this case, a standard commercial HoloLens.

The two young researchers show how the drone system works in TU Graz’s ‘drone space’ in a demonstration video on YouTube.

Virtual or mixed reality

In their lab experiments, the researchers blocked out the test subject’s entire field of vision, completely immersing her or him in a virtual image of the world. Another possibility would be for users to superimpose additional information over reality in a semi-transparent way. ‘This would be as if I could look through a wall – like an X-ray image,’ explains Okan Erat. A conceivable scenario would be as follows: a wall has to be broken through to gain access to trapped persons, but it is not clear to the rescuers whether there are dangerous tanks or power lines on the other side. With help from the drone images, precisely these potential hazards could be projected by means of a headset directly into the field of vision of the rescuers breaking through the wall. They would then know exactly where a passage could be made safely.

The drone was developed at TU Graz in the ‘droneSpace’, a drone lab in Graz’s Inffeldgasse, as part of the ‘UFO – Users Flying Organizer’ project, which deals with autonomous drone flight systems for indoor areas. ‘The drone was specially conceived for flying in narrow interior spaces and for interaction between human and machine. For this reason it’s very light, the propellers are made of soft material and the motors have comparatively low power,’ explains Alexander Isop, who designed the prototype and developed the framework for the autonomous flight system. Together with Friedrich Fraundorfer, he offers courses in the ‘droneSpace’ for interested students.

Visions of the future

The drone currently used for research is very big; the visions for the future, however, are small. Very small, in fact: ‘I can imagine, for instance, that at some time drones will be as small as flies. They could then be sent out in swarms and deliver far more extensive images of the danger zone. And they could work at very different places at the same time,’ explains Erat. This, too, is a new development of the TU Graz researchers: the user could simply switch between several drones and thus between different angles of view, for instance moving through different rooms very quickly in order to gain an overall view of the situation.

There is also plenty of room for new ideas in controlling the drone: ‘Development is heading in the direction that the user should be able to give ever simpler and more natural control commands, such as voice commands or gestures. The drone, on the other hand, must be able to solve increasingly complex tasks on its own. It is also important that the user always knows the status of the drone, so to speak, what the drone is thinking. This interaction between man and machine will continue to be a very interesting topic for me in the future,’ Isop adds. One example is that, on a simple voice command, the drone could navigate through narrow passages or search for and recognise trapped persons.

The visualisation also needs to be developed further: ‘I’m now going to concentrate on the visualisation and contextualisation. At the moment it’s only possible to visualise the actual display detail. But I’d like to create a kind of memory that can allow more space to be recognised – at least for a certain amount of time – so it can be reconstructed,’ explains Erat. But that’s just the first step: ‘Ultimately, we want to make the drone as smart as possible. In the end, it should know by itself what the user wants from it. That’s the big picture,’ says the scientist, smiling.

This research project is attributed to the Field of Expertise ‘Information, Communication & Computing’, one of TU Graz’s five strategic areas of research.

Visit Planet research for more research-related news.

Contact

Okan ERAT

M.Sc.

Institute of Computer Graphics and Vision

Inffeldgasse 16/II | 8010 Graz

Phone: +43 316 873 5032

okan.erat@icg.tugraz.at

Alexander ISOP

BSc MSc

Institute of Computer Graphics and Vision

Inffeldgasse 16/II | 8010 Graz

Phone: +43 316 873 5055

isop@icg.tugraz.at