Through the Eyes of a Robot

The possibilities offered by robots are becoming ever more extensive and spectacular. But one point remains essential: without human intervention before or during use, robots would be little more than expensive paperweights. Eduardo Veas from the Institute of Interactive Systems and Data Science at TU Graz works on the interface between humans and machines and, above all, on making interaction with technology user-friendly. His research area is called human-centred computing and, as the name suggests, it focuses on the needs of the people who use human-computer systems.

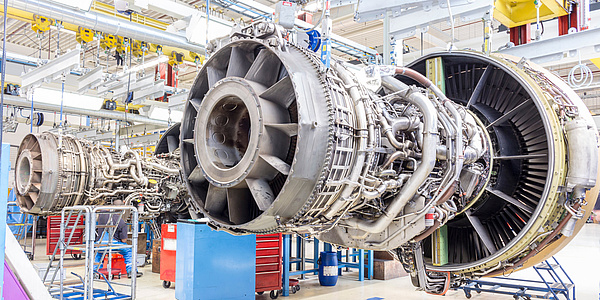

“We have telepresence and teleoperation, for example. The robot serves as an avatar for a person. In this context, the term avatar does not mean that the person should be perceived as a robot, but rather that they should be able to interact with the environment through the robot,” explains Eduardo Veas. Applications range from operating and maintaining machines in hazardous environments to exploring unsecured caves or shafts. To do this, the robot has to collect sensor data from its surroundings and act the way the human wants it to. It can be operated directly or act autonomously, although autonomy is not the central concern for Eduardo Veas and his team in this area of research. Their focus is on presenting the collected information and on interacting with the machine using virtual reality.

With the robot in the mine shaft using VR glasses

Together with the Montanuniversität Leoben, Eduardo Veas is currently working on a project in which a robot explores a mine shaft using numerous cameras and sensors. The robot not only records its surroundings and displays them in virtual reality, but also detects whether gases are present in the shaft before people enter it. “An environment like this poses many challenges that still require research. In this real-life scenario, there is no light, no electricity and, initially, no communication. How best to set up the right infrastructure is itself a research question. Our work focuses on visualising the reconstructed sensor data. However, the reconstruction of the environment and the interaction in VR still need further research before they work as desired. A large team has to work together to solve all these problems,” says Eduardo Veas.

The top priority is interaction with the robot, as everything else depends on it. Only when this works smoothly can the wearer follow the surroundings in VR without problems: if the robot’s movements do not match what the VR glasses display, the experience becomes unpleasant and the wearer feels something like seasickness. Once this has been mastered, environmental recognition can be improved to obtain as realistic a picture as possible of the surroundings and the conditions there, as captured by the robot.

Control via speech

To take interaction with the machine a step further, the researchers are also working on voice control. Combined with good environmental recognition in particular, this would allow the robot to be steered more precisely to specific points, such as a spot on the floor or a point on the wall. For now, the team uses a connection to ChatGPT. A speech-to-text module converts the spoken instruction or question into text, which is sent to ChatGPT. ChatGPT either translates the instruction into a form the robot can execute or formulates an answer. Finally, the robot carries out the instruction or speaks the answer via a text-to-speech module.
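The voice-control loop described above can be sketched in Python as a single round trip. This is a minimal illustration under stated assumptions, not the team's implementation: the speech-to-text, language-model and text-to-speech components are passed in as plain functions, the stand-ins at the bottom are hypothetical and make no network calls, and the JSON reply format with a `type` field of `"command"` or `"answer"` is an assumption made for the example rather than anything ChatGPT returns by default.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Command:
    action: str   # hypothetical action name, e.g. "move" or "turn"
    value: float  # assumed units: metres for "move", degrees for "turn"


def handle_utterance(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    language_model: Callable[[str], str],
    text_to_speech: Callable[[str], None],
    execute: Callable[[Command], None],
) -> str:
    """One voice round trip: transcribe, ask the model, then act or speak.

    The model is assumed (for this sketch only) to reply with JSON of the form
    {"type": "command", "action": ..., "value": ...} or
    {"type": "answer", "text": ...}. Returns the reply type.
    """
    text = speech_to_text(audio)                 # 1. speech -> text
    reply = json.loads(language_model(text))     # 2. model converts or answers
    if reply["type"] == "command":
        execute(Command(reply["action"], float(reply["value"])))  # 3a. robot acts
    else:
        text_to_speech(reply["text"])            # 3b. robot speaks the answer
    return reply["type"]


# --- Hypothetical stand-ins for the real modules (no network calls) ---
executed: list[Command] = []
spoken: list[str] = []


def fake_speech_to_text(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio is already text


def fake_language_model(prompt: str) -> str:
    # A real system would send `prompt` to ChatGPT or a local model here.
    if prompt.startswith("walk"):
        return json.dumps({"type": "command", "action": "move", "value": 5})
    return json.dumps({"type": "answer", "text": "I received: " + prompt})


handle_utterance(b"walk five metres forwards", fake_speech_to_text,
                 fake_language_model, spoken.append, executed.append)
handle_utterance(b"where are you?", fake_speech_to_text,
                 fake_language_model, spoken.append, executed.append)
```

Passing the components in as functions keeps the loop independent of any particular service, so swapping ChatGPT for a locally running model would, in this sketch, only change one argument.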

“Carrying out specific instructions that refer to the environment requires the robot to perceive that environment. Without perception, only simple instructions are possible, such as ‘walk five metres forwards’ or ‘turn a few degrees’. If the robot perceives its environment well, you can tell it to walk towards the boulder, look at the red spot on the wall or go into the rock niche,” says Eduardo Veas. To make the robots independent in this respect, the next step will be to replace ChatGPT with a language model that runs locally. The robot will then be able to see, hear and speak on behalf of humans and venture into dangerous environments while the user watches safely through VR goggles and gives spoken instructions.
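The difference between perception-free and perception-dependent instructions can be illustrated with a small parser. The sketch below is hypothetical: it accepts only fixed phrasings such as “walk five metres forwards” or “turn 30 degrees right”, and the names `parse_simple_instruction` and `WORD_NUMBERS`, the sign conventions and the tiny number vocabulary are assumptions for the example. Anything that refers to an object, such as “walk towards the boulder”, returns `None`, because resolving it would require the robot to perceive its surroundings.

```python
import re
from typing import Optional, Tuple

# Hypothetical vocabulary of spelled-out numbers, kept small for the sketch.
WORD_NUMBERS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
                "ten": 10, "ninety": 90}


def to_number(token: str) -> Optional[float]:
    """Convert a digit string or a known number word to a float."""
    if token in WORD_NUMBERS:
        return float(WORD_NUMBERS[token])
    try:
        return float(token)
    except ValueError:
        return None


def parse_simple_instruction(text: str) -> Optional[Tuple[str, float]]:
    """Map a perception-free instruction to (action, signed value).

    Assumed conventions: forwards and left are positive, backwards and
    right are negative. Returns None for anything else, e.g. instructions
    that name objects in the environment.
    """
    text = text.lower().strip()
    m = re.fullmatch(r"walk (\S+) metres? (forwards|backwards)", text)
    if m:
        value = to_number(m.group(1))
        if value is not None:
            return ("move", value if m.group(2) == "forwards" else -value)
    m = re.fullmatch(r"turn (\S+) degrees? (left|right)", text)
    if m:
        value = to_number(m.group(1))
        if value is not None:
            return ("turn", value if m.group(2) == "left" else -value)
    return None  # would need perception to resolve


print(parse_simple_instruction("walk five metres forwards"))  # ('move', 5.0)
print(parse_simple_instruction("walk towards the boulder"))   # None
```

A grammar this rigid is only workable because such instructions need no knowledge of the surroundings; once the robot can perceive objects like the boulder or the rock niche, a language model becomes the more natural way to map free-form requests onto targets.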

This research is anchored in the Field of Expertise "Information, Communication & Computing", one of five strategic foci of TU Graz.


Contact

Eduardo VEAS

Univ.-Prof. Dr.techn. MSc

TU Graz | Institute of Interactive Systems and Data Science

Phone: +43 316 873 30858

eveas@tugraz.at