TAKEN: Semi-Supervised Multi-Label Whole Heart Segmentation

Suitable as: Master's Project / Thesis

Student currently working on this project: Elisabeth Rechberger

Deep learning has boosted the state of the art in many computer vision and medical imaging tasks. Unfortunately, deep convolutional neural networks (CNNs) require large amounts of data to be trained successfully and to deliver good performance. Especially in the medical imaging domain, obtaining large annotated datasets is difficult, not only for financial and ethical reasons, but also because the annotations must be provided by medical experts.

Semi-supervised learning alleviates the need for large numbers of annotations by also exploiting information from images for which no annotations are available [1]. The goal of this project is to apply semi-supervised learning techniques to semantic segmentation problems, specifically to multi-label whole heart segmentation [2]. The student will review the literature on semi-supervised semantic segmentation using CNNs in the medical imaging domain, and implement and compare various semi-supervised learning methods, e.g., using generative adversarial networks (GANs) to regularize the predicted segmentations.
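The project names GAN-based regularization as one option. As a simpler, hedged illustration of how unlabeled images can enter the training objective, the sketch below uses confidence-thresholded pseudo-labeling instead, a different and widely used semi-supervised technique that is not necessarily one the project will adopt. Per-pixel class scores are plain Python lists; all function names, the 0.9 threshold, and the 0.5 weight are illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over the class scores of one pixel."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target):
    """Negative log-likelihood of the target class for one pixel."""
    return -math.log(softmax(scores)[target])


def pseudo_labels(pixel_scores, threshold=0.9):
    """Argmax class per pixel if its probability reaches `threshold`, else None (pixel ignored)."""
    labels = []
    for scores in pixel_scores:
        probs = softmax(scores)
        best = max(range(len(probs)), key=probs.__getitem__)
        labels.append(best if probs[best] >= threshold else None)
    return labels


def semi_supervised_loss(lab_scores, lab_targets, unlab_scores, weight=0.5, threshold=0.9):
    """Supervised cross-entropy plus a weighted cross-entropy on confident pseudo-labels.

    In a real training framework the pseudo-labels would be treated as constants
    (no gradient flows through them); here only the loss value is computed.
    """
    sup = sum(cross_entropy(s, t) for s, t in zip(lab_scores, lab_targets)) / len(lab_targets)
    pairs = [(s, t) for s, t in zip(unlab_scores, pseudo_labels(unlab_scores, threshold))
             if t is not None]
    unsup = sum(cross_entropy(s, t) for s, t in pairs) / len(pairs) if pairs else 0.0
    return sup + weight * unsup
```

For example, `pseudo_labels([[4.0, 0.0, 0.0], [0.4, 0.5, 0.3]])` keeps the first pixel as class 0 (probability about 0.96) and ignores the second, whose highest class probability is only about 0.37. For whole heart segmentation, the classes would be the labeled cardiac substructures plus background.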

[1] Yang et al., Unsupervised Domain Adaptation for Automatic Estimation of Cardiothoracic Ratio, International Conference on Medical Image Computing and Computer-Assisted Intervention, 2018

[2] Payer et al., Multi-Label Whole Heart Segmentation Using CNNs and Anatomical Label Configurations, International Workshop on Statistical Atlases and Computational Models of the Heart, 2017

TAKEN: Detection of Infected Teeth in 3D CBCT Images

Suitable as: Bachelor's Thesis / Master's Project / Master's Thesis

Student currently working on this project: Arnela Hadzic

Tooth-associated infections, typically caused by bacteria, are very common. These pathologies are usually located around the roots of the teeth. Their size ranges from a simple widening of the periodontal space up to several millimeters or more; they may be completely surrounded by bone or perforate the adjacent anatomical borders. Furthermore, they can potentially affect any of the roughly 30 roots per jaw. Locating them manually often requires a large amount of work, depending on the number of investigated teeth, the quality of the dataset, and the training and experience of the examining doctor. The aim of the project is to train deep convolutional neural networks (DCNNs) to automatically recognize all infected teeth in 3D cone beam computed tomography (CBCT) images.
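One possible formulation, assuming the heatmap regression approach of [1] is adapted to this task (an assumption, not a stated project decision): each infected root is represented by a Gaussian blob in a 3D target heatmap, a CNN is trained to regress that heatmap, and detections are read off as local maxima. The sketch shows only target generation and peak extraction on plain nested lists; the CNN itself is omitted, and `sigma`, `threshold`, and the function names are hypothetical.

```python
import math
from itertools import product


def gaussian_heatmap(shape, center, sigma):
    """Target heatmap: a 3D Gaussian with peak value 1 at `center` (z, y, x)."""
    dz, dy, dx = shape
    cz, cy, cx = center
    return [[[math.exp(-((z - cz) ** 2 + (y - cy) ** 2 + (x - cx) ** 2) / (2 * sigma ** 2))
              for x in range(dx)] for y in range(dy)] for z in range(dz)]


def merge_max(h1, h2):
    """Combine the heatmaps of several targets by a voxel-wise maximum."""
    return [[[max(a, b) for a, b in zip(r1, r2)] for r1, r2 in zip(s1, s2)]
            for s1, s2 in zip(h1, h2)]


def detect_peaks(heatmap, threshold=0.5):
    """Voxels at or above `threshold` that are maxima of their 26-neighbourhood."""
    dz, dy, dx = len(heatmap), len(heatmap[0]), len(heatmap[0][0])
    peaks = []
    for z, y, x in product(range(dz), range(dy), range(dx)):
        v = heatmap[z][y][x]
        if v < threshold:
            continue
        neighbours = (
            heatmap[z + oz][y + oy][x + ox]
            for oz, oy, ox in product((-1, 0, 1), repeat=3)
            if (oz, oy, ox) != (0, 0, 0)
            and 0 <= z + oz < dz and 0 <= y + oy < dy and 0 <= x + ox < dx
        )
        if all(n <= v for n in neighbours):
            peaks.append((z, y, x))
    return peaks


if __name__ == "__main__":
    # Two hypothetical infected roots in a small 8x8x8 volume.
    target = merge_max(gaussian_heatmap((8, 8, 8), (2, 2, 2), 1.0),
                       gaussian_heatmap((8, 8, 8), (5, 5, 5), 1.0))
    print(detect_peaks(target))
```

In practice the predicted heatmap would come from the network rather than from the ground-truth positions, and the detected peaks would then be assigned to individual teeth.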

The project is in collaboration with Dr.med.dent. Dr. Barbara Kirnbauer, LKH Graz, Department of Dentistry and Oral Health.

[1] Payer et al., Integrating spatial configuration into heatmap regression based CNNs for landmark localization, Medical Image Analysis, 2019

TAKEN: Deep Active Learning for Semantic Segmentation

Suitable as: Master's Project / Thesis

Student currently working on this project: Johannes Franz Spöcklberger

The exponential growth of data contributed significantly to the success of deep learning in the last decade. While more data often leads to better performance, there are practical limitations to consider. First, it can be infeasible to acquire additional data in significant quantities. Second, annotating data is a laborious process that can quickly become very costly, especially when human experts are required. Finally, some data samples can be detrimental to the overall performance of the model and are preferably excluded from training.

Active Learning (AL) mitigates these shortcomings by focusing the annotation effort solely on the most informative samples in an iterative learning procedure. To accomplish this, an AL system proposes a subset of data samples in an unsupervised manner and requests annotations from a human expert. This subset is then added to the annotated data pool and used to train a model to solve the given task. When training finishes, the trained model is used to propose another subset of samples to be annotated and the procedure starts over until the predefined total annotation effort of the human expert is reached [1].
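The iterative procedure described above can be sketched as a pool-based loop. This is a minimal, hedged sketch: the initial "unsupervised" proposal is assumed to be random sampling, the human expert is replaced by an `oracle` callable, and the 1D toy problem (threshold at 10) and all function names are hypothetical stand-ins for a real segmentation model and annotation tool.

```python
import random


def active_learning_loop(pool, oracle, train, score, batch_size, budget):
    """Pool-based active learning until `budget` annotations have been requested.

    pool   : unlabeled samples
    oracle : callable(sample) -> annotation (stands in for the human expert)
    train  : callable(list of (sample, annotation)) -> model
    score  : callable(model, sample) -> informativeness, higher = query first
    """
    unlabeled = list(pool)
    labeled = []
    model = None
    while len(labeled) < budget and unlabeled:
        n = min(batch_size, budget - len(labeled), len(unlabeled))
        if model is None:
            # No model yet: propose the initial subset without supervision (here: at random).
            query = random.sample(unlabeled, n)
        else:
            # Rank the remaining pool with the current model and take the top n.
            query = sorted(unlabeled, key=lambda s: score(model, s), reverse=True)[:n]
        for sample in query:
            unlabeled.remove(sample)
            labeled.append((sample, oracle(sample)))
        model = train(labeled)  # retrain on the enlarged annotated pool
    return model, labeled


# Toy problem (hypothetical): 1D samples, the "expert" labels x >= 10 as positive.
def toy_oracle(x):
    return x >= 10


def toy_train(labeled):
    """Toy 'model': an estimate of the decision threshold."""
    neg = [x for x, y in labeled if not y]
    pos = [x for x, y in labeled if y]
    if neg and pos:
        return (max(neg) + min(pos)) / 2
    return sum(x for x, _ in labeled) / len(labeled)


def toy_score(threshold, x):
    """Samples closest to the current threshold are considered the most informative."""
    return -abs(x - threshold)


if __name__ == "__main__":
    random.seed(0)
    model, labeled = active_learning_loop(range(20), toy_oracle, toy_train, toy_score,
                                          batch_size=3, budget=8)
    print("estimated threshold:", model)
    print("annotated samples:", sorted(x for x, _ in labeled))
```

The loop stops as soon as the annotation budget is used up, which mirrors the "predefined total annotation effort" in the description; `batch_size` controls how many samples the expert annotates per round.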

The goal of this project is to apply deep AL to semantic segmentation problems on cell images, using uncertainty measures, e.g., based on dropout, as the query function that proposes data samples for annotation.
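A hedged sketch of a dropout-based query function, assuming Monte Carlo dropout (dropout kept active at inference, T stochastic passes averaged) and predictive entropy as the informativeness measure; the project may well use other measures. A toy per-pixel linear classifier stands in for the segmentation CNN, and all names, weights, and features are hypothetical.

```python
import math
import random


def dropout_forward(pixel_features, weights, p, rng):
    """One stochastic pass of a toy per-pixel linear classifier with dropout kept ON.

    Inverted dropout: kept features are scaled by 1 / (1 - p).
    Returns class probabilities for every pixel.
    """
    probs = []
    for features in pixel_features:
        kept = [f / (1 - p) if rng.random() >= p else 0.0 for f in features]
        scores = [sum(w * f for w, f in zip(row, kept)) for row in weights]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        probs.append([e / total for e in exps])
    return probs


def predictive_entropy(prob_samples):
    """Entropy of the class distribution averaged over T stochastic passes (one pixel)."""
    T = len(prob_samples)
    mean = [sum(p[c] for p in prob_samples) / T for c in range(len(prob_samples[0]))]
    return -sum(m * math.log(m) for m in mean if m > 0)


def image_uncertainty(mc_predictions):
    """mc_predictions[t][i] = class probabilities of pixel i in pass t.

    Returns the mean per-pixel predictive entropy as a single score per image.
    """
    T, n_pixels = len(mc_predictions), len(mc_predictions[0])
    entropies = [predictive_entropy([mc_predictions[t][i] for t in range(T)])
                 for i in range(n_pixels)]
    return sum(entropies) / n_pixels


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [[1.0, -0.5], [-0.5, 1.0]]          # hypothetical 2-class, 2-feature model
    image = [[2.0, 0.1], [0.6, 0.5], [0.1, 2.0]]  # three pixels with toy features
    mc = [dropout_forward(image, weights, p=0.5, rng=rng) for _ in range(20)]
    print("query score:", image_uncertainty(mc))
```

Pixels on which the stochastic passes disagree receive a high entropy, so images with many ambiguous pixels, such as touching cell boundaries, rank high and are proposed for annotation first.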

[1] Yang et al., Suggestive Annotation: A Deep Active Learning Framework for Biomedical Image Segmentation, International Conference on Medical Image Computing and Computer-Assisted Intervention, 2017

FINISHED: Deep Reinforcement Learning in Medical Image Applications

Suitable as: Master's Project / Thesis

Student currently working on this project: Klemens Kasseroller

By learning a sequence of actions that maximizes the expected reward, deep reinforcement learning (DRL) has brought significant performance improvements in many areas, including games, robotics, natural language processing, and computer vision. In 2013, DeepMind, then a small and little-known company, achieved a breakthrough in reinforcement learning with a system that learned to play many classic Atari games at human or even superhuman level. Still, it was not until recently that DRL also started to appear in medical image applications, e.g., for landmark detection, automatic view planning from 3D MR images, or active breast lesion detection [1,2].
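To make "learning a sequence of actions that maximizes the expected reward" concrete, here is a deliberately tiny tabular Q-learning sketch. It is not deep RL and not the method of [1] or [2]: a hypothetical agent moves left or right along a 1D image line to find a landmark, with the reward defined as the decrease in distance to the landmark, loosely inspired by the distance-based rewards used by landmark detection agents. All names and hyperparameters are illustrative assumptions.

```python
import random

ACTIONS = (-1, +1)  # move one position left / right along the image line


def step(pos, action, n_positions):
    """Apply an action, clamping the position to the image borders."""
    return min(max(pos + ACTIONS[action], 0), n_positions - 1)


def greedy(q_row):
    """Index of the action with the highest Q-value (ties go to 'left')."""
    return 0 if q_row[0] >= q_row[1] else 1


def train_q_agent(n_positions, landmark, episodes=500, alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Tabular Q-learning; reward = decrease of the distance to the landmark."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_positions)]
    for _ in range(episodes):
        pos = rng.randrange(n_positions)          # random start position
        for _ in range(2 * n_positions):          # cap on episode length
            if pos == landmark:                   # landmark reached: terminal state
                break
            a = rng.randrange(2) if rng.random() < eps else greedy(q[pos])  # epsilon-greedy
            nxt = step(pos, a, n_positions)
            reward = abs(pos - landmark) - abs(nxt - landmark)
            target = reward if nxt == landmark else reward + gamma * max(q[nxt])
            q[pos][a] += alpha * (target - q[pos][a])
            pos = nxt
    return q


def greedy_path(q, start, landmark, max_steps=100):
    """Follow the learned policy from `start`; returns the visited positions."""
    pos, path = start, [start]
    while pos != landmark and len(path) <= max_steps:
        pos = step(pos, greedy(q[pos]), len(q))
        path.append(pos)
    return path


if __name__ == "__main__":
    q = train_q_agent(n_positions=10, landmark=6)
    print(greedy_path(q, start=0, landmark=6))
```

Deep RL approaches replace the table `q` with a neural network that maps the image content around the agent's current position to action values, which is what makes the idea applicable to 3D medical images.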

[1] Ghesu et al., Multi-Scale Deep Reinforcement Learning for Real-Time 3D-Landmark Detection in CT Scans, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017

[2] Alansary et al., Automatic View Planning with Multi-scale Deep Reinforcement Learning Agents, MICCAI, Granada, Spain, 2018

FINISHED: Bayesian Neural Networks in Medical Image Applications

Suitable as: Master's Thesis

Student currently working on this project: Stefan Eggenreich

Project is combined with: Age estimation and growth prediction from MRI data of the knee

While standard deep convolutional neural networks have recently shown unprecedented results, in some cases even surpassing human performance, in computer vision tasks such as classification, segmentation, or detection, these methods do not capture model uncertainty. Being able to provide a prediction together with its uncertainty is crucial for many medical applications related to decision making. Bayesian probability theory offers mathematically grounded tools to reason about model uncertainty [1][2]. Therefore, Bayesian deep learning, a field at the intersection of deep learning and Bayesian probability theory, has recently attracted great interest from both the computer vision and the medical imaging communities.
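As a hedged illustration of "a prediction together with its uncertainty": assuming a regression network (for instance for age estimation) that outputs a mean and a variance and is sampled T times, e.g. with Monte Carlo dropout, the total predictive variance can be split into an epistemic (model) part and an aleatoric (data) part, following the decomposition described by Kendall et al. [2]. The function name and the example numbers are hypothetical.

```python
def decompose_uncertainty(samples):
    """Split predictive variance into epistemic and aleatoric parts.

    samples: list of (mean, variance) pairs, one per stochastic forward pass
             (e.g. T Monte Carlo dropout passes of a regression network).
    Returns (prediction, epistemic, aleatoric, total), where
      epistemic = variance of the predicted means    (model uncertainty)
      aleatoric = average of the predicted variances (data noise)
    """
    T = len(samples)
    means = [m for m, _ in samples]
    prediction = sum(means) / T
    epistemic = sum((m - prediction) ** 2 for m in means) / T
    aleatoric = sum(v for _, v in samples) / T
    return prediction, epistemic, aleatoric, epistemic + aleatoric


if __name__ == "__main__":
    # Hypothetical outputs of three passes for one knee MRI (age in years, variance in years^2).
    passes = [(16.0, 1.0), (17.0, 1.0), (18.0, 1.0)]
    print(decompose_uncertainty(passes))
```

For the three hypothetical passes, the prediction is 17 years with an epistemic variance of 2/3 and an aleatoric variance of 1. Epistemic uncertainty can in principle be reduced with more training data, whereas aleatoric uncertainty reflects noise inherent in the images.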

[1] Blundell et al., Weight Uncertainty in Neural Networks, ICML 2015

[2] Kendall et al., What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, NIPS, Long Beach, USA, 2017

The work in this student project has led to a publication at OAGM 2017, which received the best paper award, as well as a publication at OAGM 2018.

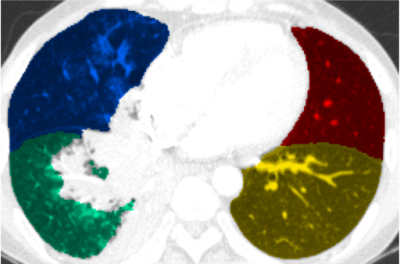

Over the last years, we have developed a GPU-based vascular tree extraction scheme that can be used to segment the pulmonary vessel tree from dual-energy thorax CT data. The LBI-LVR is interested in detecting early signs of pulmonary hypertension by performing CT scans of the lung vessels and analyzing the vessel structures of diseased and healthy patients.

When analyzing measures that try to predict pulmonary hypertension (PH) from the vascular tree, an interesting question is whether a global measure for the whole tree is sufficient, or whether individual measures for the different lung lobes give a more sensitive and specific prediction. Therefore, in this master's project we aim to develop a lung lobe segmentation algorithm, based on [1], so that the quantitative measures predicting PH can be applied to the individual lobes. Students interested in this project should have C++ programming skills. Our libraries make extensive use of CUDA-based image processing, so interest or even experience in this area would be beneficial.
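To illustrate the difference between a global measure and per-lobe measures, the sketch below assumes a lobe label volume (0 = outside the lungs, 1 to 5 = the five lobes) and a binary vessel mask on the same voxel grid, both flattened to 1D, and uses the vessel volume fraction as a stand-in for the actual quantitative PH measures, which are not specified here. The project itself targets C++/CUDA; Python is used only for brevity, and all names are hypothetical.

```python
from collections import Counter

# Hypothetical label convention for the lobe segmentation output.
LOBE_NAMES = {1: "right upper", 2: "right middle", 3: "right lower",
              4: "left upper", 5: "left lower"}


def vessel_fractions(lobe_labels, vessel_mask):
    """Fraction of voxels flagged as vessel, over all lobes and per lobe.

    lobe_labels: flat sequence of ints (0 = outside the lungs, 1..5 = lobe id)
    vessel_mask: flat sequence of bools with the same length
    """
    if len(lobe_labels) != len(vessel_mask):
        raise ValueError("label and mask volumes must have the same size")
    lobe_voxels, vessel_voxels = Counter(), Counter()
    for label, is_vessel in zip(lobe_labels, vessel_mask):
        if label == 0:
            continue  # ignore voxels outside the lungs
        lobe_voxels[label] += 1
        if is_vessel:
            vessel_voxels[label] += 1
    total = sum(lobe_voxels.values())
    global_fraction = sum(vessel_voxels.values()) / total if total else 0.0
    per_lobe = {label: vessel_voxels[label] / n for label, n in lobe_voxels.items()}
    return global_fraction, per_lobe


if __name__ == "__main__":
    labels = [0, 1, 1, 1, 1, 4, 4, 4, 4]
    vessels = [True, True, False, False, False, True, True, True, False]
    g, per_lobe = vessel_fractions(labels, vessels)
    print("global:", g)
    for label, frac in per_lobe.items():
        print(LOBE_NAMES[label], frac)
```

In this toy volume the global fraction is 0.5, while the two lobes differ strongly (0.25 and 0.75): a change confined to one lobe can be diluted in a whole-tree average, which is exactly why per-lobe measures may be more sensitive.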

[1] Lassen et al., Automatic Segmentation of the Pulmonary Lobes From Chest CT Scans Based on Fissures, Vessels, and Bronchi, IEEE Transactions on Medical Imaging, 2013
