CAMERA DRONES LECTURE

Overview

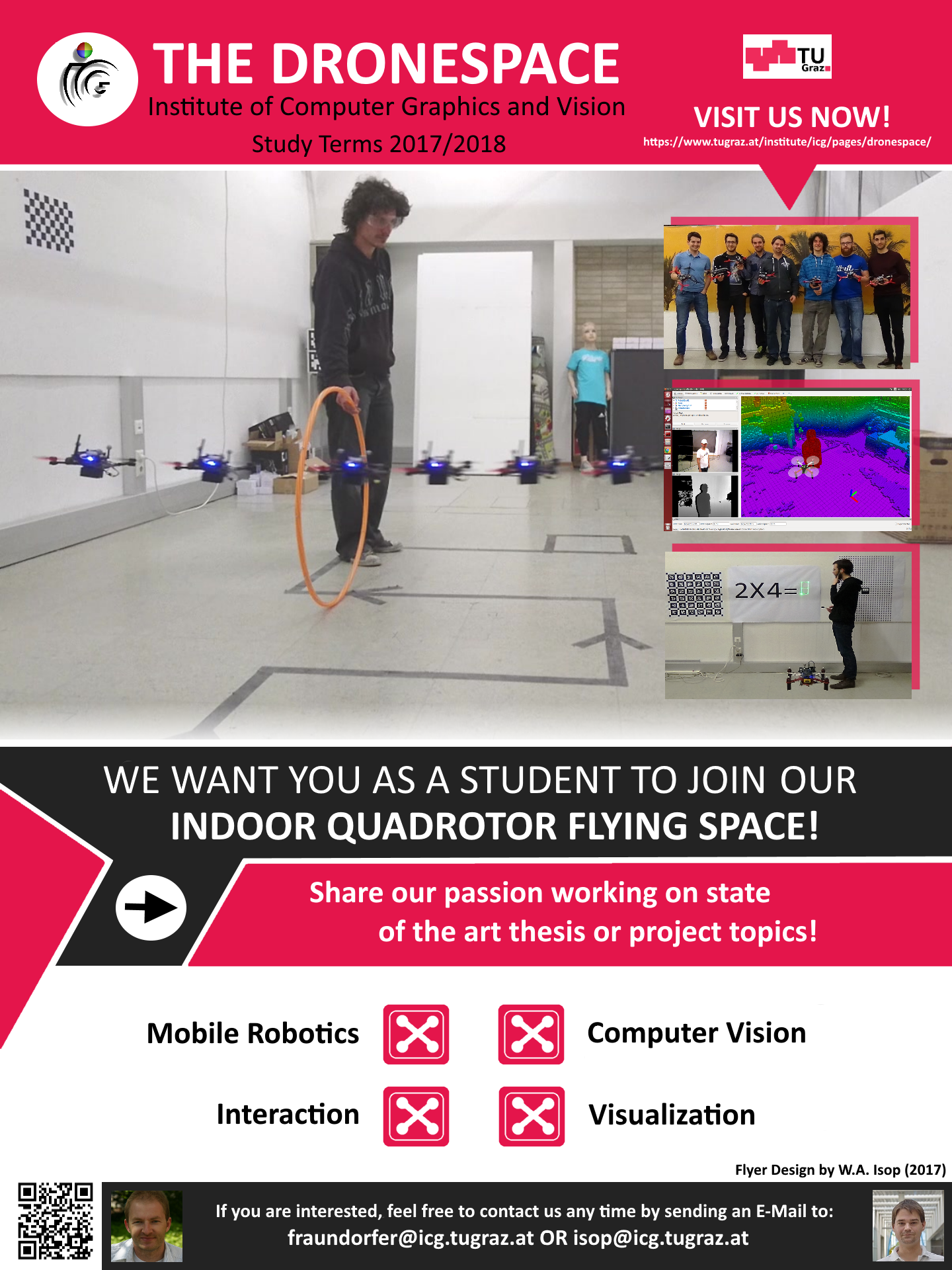

This lecture covers theoretical and practical aspects of the setup, operation and applications of camera drones, as well as guidelines for their use.

The topics addressed include the basics of quadrotor control, the theory of control and navigation, camera-based flight control (visual-inertial navigation, obstacle avoidance, path planning and motion capture systems), computer vision combined with 3D reconstruction, planning of vision-based flights with MAVs, legal and practical aspects of drone flights, and common robotics frameworks (ROS).

The lecture includes a practical part, in which students gain hands-on experience with topics such as interfacing a quadrotor with ROS, object detection, mapping and navigation. For this purpose, students use the autonomous MAV flight system, which is based on the Parrot Bebop 2. The framework is used to implement and test the tasks given to the students as part of the lecture. In the final phase, students test their implementations during flights in the ICG's indoor MAV flying arena, the "droneSpace". Since Winter Term 2017/2018, the practical part has consisted of an MAV rescue challenge, in which participating students work on different task categories to find an artificial victim placed inside the flying space.

Details about the rescue challenge are outlined in the following document:

Rescue Challenge Rules - Winter Term 2018/2019

The practical part is complemented by a written exam at the end of the semester. The course homepage can be found here.

Supervisors


Projects Of Winter Term 2017/2018

Indoor MAV Rescue Challenge

In Winter Term 2017/2018, the students competed in an indoor rescue challenge. They used the autonomous MAV flight system of the droneSpace to solve three types of problems, focusing on:

- Marker Tracking - To identify objects of interest like hazardous areas or victims

- Path Planning and Exploration - To discover the environment and (semi-)autonomously navigate towards objects of interest

- Mapping - To create a 3D map of the environment during exploration

Don't forget to check out our new videos from the last winter semester, which you can find here as a playlist or below!

GROUP 1

Description

Group 1 first lets the MAV autonomously explore its immediate surroundings and then lets a remote user decide where to explore next, until a potential victim is found. Unlike the other groups, Group 1 uses a probabilistic roadmap (PRM) planner to generate global waypoints for exploration.

Sensors Involved

Optitrack Motion Capture System, Orbbec Astra RGBD Sensor
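The page does not describe how Group 1's planner is implemented. As a rough illustration of the PRM idea only, the following Python sketch samples collision-free points in a 2D workspace, connects each point to its k nearest neighbors when the straight edge between them is collision-free, and queries the resulting roadmap with Dijkstra's algorithm. The circular obstacles, the workspace bounds and the parameters `n_samples`, `k` and `seed` are hypothetical; a planner for the droneSpace would operate in 3D on an occupancy map of the arena.

```python
import heapq
import math
import random


def is_free(p, obstacles):
    """True if point p lies outside every circular obstacle (cx, cy, r)."""
    return all(math.dist(p, (cx, cy)) > r for cx, cy, r in obstacles)


def edge_free(a, b, obstacles, step=0.05):
    """Check the straight segment a->b by testing evenly spaced samples on it."""
    n = max(1, int(math.dist(a, b) / step))
    for i in range(n + 1):
        t = i / n
        p = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        if not is_free(p, obstacles):
            return False
    return True


def prm(start, goal, obstacles, bounds, n_samples=300, k=10, seed=0):
    """Build a probabilistic roadmap; return a start->goal waypoint list or None."""
    rng = random.Random(seed)
    (xmin, xmax), (ymin, ymax) = bounds
    nodes = [start, goal]  # index 0 = start, index 1 = goal
    # 1) Sample collision-free configurations.
    while len(nodes) < n_samples + 2:
        p = (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
        if is_free(p, obstacles):
            nodes.append(p)
    # 2) Connect each node to its k nearest neighbors if the edge is collision-free.
    edges = {i: [] for i in range(len(nodes))}
    for i, p in enumerate(nodes):
        nearest = sorted(range(len(nodes)), key=lambda j: math.dist(p, nodes[j]))
        for j in nearest[1:k + 1]:  # nearest[0] is the node itself
            if edge_free(p, nodes[j], obstacles):
                w = math.dist(p, nodes[j])
                edges[i].append((j, w))
                edges[j].append((i, w))
    # 3) Query the roadmap with Dijkstra's algorithm.
    dist, prev, queue = {0: 0.0}, {}, [(0.0, 0)]
    while queue:
        d, u = heapq.heappop(queue)
        if u == 1:
            break
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in edges[u]:
            if d + w < dist.get(v, math.inf):
                dist[v], prev[v] = d + w, u
                heapq.heappush(queue, (d + w, v))
    if 1 not in dist:
        return None  # goal not connected to start in this roadmap
    path, u = [nodes[1]], 1
    while u != 0:
        u = prev[u]
        path.append(nodes[u])
    return path[::-1]


if __name__ == "__main__":
    waypoints = prm((1.0, 1.0), (9.0, 9.0), [(5.0, 5.0, 2.0)], ((0.0, 10.0), (0.0, 10.0)))
    print(waypoints)
```

Because the roadmap is built once and can then be queried for many goals, a PRM suits exploration in a mostly static environment where a remote user repeatedly picks new targets.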

GROUP 2

Description

For Group 2, the challenge was split into two iterations. In the first iteration, occupancy information is given and a remote user defines a goal close to where a victim is expected. In the second iteration, Group 2 updates the occupancy information online from the generated Octomap and, based on it, generates navigation paths to explore the environment.

Sensors Involved

Optitrack Motion Capture System, Orbbec Astra RGBD Sensor

GROUP 3

Description

Following the principle of a scavenger hunt, Group 3 has to detect QR codes, use the encoded information to navigate inside the environment and finally find the victim.

Sensors Involved

Optitrack Motion Capture System, Orbbec Astra RGBD Sensor

Projects Of Winter Term 2016/2017

"Don’t Throw Things At Drones!" (Rafael Weilharter)

Description

When a reflective marker is thrown towards the drone along a parabolic trajectory, the drone should evade it if a hit is imminent.

Sensors Involved

Optitrack Motion Capture System
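Deciding whether a hit is imminent amounts to predicting the marker's parabolic flight and checking how close it will pass to the drone. The sketch below is a minimal, hypothetical version of that check: it assumes the marker's position and velocity are already known in a world frame with z pointing up (for example by differencing consecutive Optitrack positions) and ignores air drag. The function names, the 0.5 m `safety_radius` and the 2 s prediction horizon are assumptions, and the evasive maneuver itself is not shown.

```python
import math

# Assumed world frame: z axis pointing up, units in meters and seconds.
GRAVITY = (0.0, 0.0, -9.81)


def predict_position(p0, v0, t):
    """Drag-free ballistic position of the marker t seconds after the measurement."""
    return tuple(p + v * t + 0.5 * g * t * t for p, v, g in zip(p0, v0, GRAVITY))


def closest_approach(p0, v0, drone, horizon=2.0, dt=0.01):
    """Sample the predicted parabola; return (time, distance) of closest approach."""
    best_t, best_d = 0.0, math.dist(p0, drone)
    for i in range(1, int(horizon / dt) + 1):
        t = i * dt
        d = math.dist(predict_position(p0, v0, t), drone)
        if d < best_d:
            best_t, best_d = t, d
    return best_t, best_d


def hit_imminent(p0, v0, drone, safety_radius=0.5, horizon=2.0):
    """Return (True, t) if the marker is predicted to pass within safety_radius of the drone."""
    t, d = closest_approach(p0, v0, drone, horizon)
    return d < safety_radius, t


if __name__ == "__main__":
    # Marker thrown from 3 m away so that it reaches a drone hovering at 1.5 m after 0.5 s.
    print(hit_imminent((3.0, 0.0, 1.0), (-6.0, 0.0, 3.4525), (0.0, 0.0, 1.5)))
```

The returned time of closest approach indicates how much time remains for an avoidance maneuver.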

"Optitrack & RGBD-Sensor Based Indoor Mapping" (Christoph Leitner)

Description

Optitrack pose measurements and RGBD sensor data from the flying drone are used to map the indoor flying space. From the depth data, an Octomap 3D occupancy grid is generated onboard for visualization.

Sensors Involved

Optitrack Motion Capture System, Orbbec Astra RGBD Sensor
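An Octomap occupancy grid stores the occupancy probability of each voxel in log-odds form and updates it with every depth measurement. The sketch below illustrates that update rule on a hypothetical flat voxel dictionary rather than Octomap's octree: voxels traversed by a depth ray become more likely free, the voxel containing the measured point becomes more likely occupied, and the values are clamped so the map can still adapt to changes. The hit/miss probabilities 0.7/0.4 and the clamping bounds 0.12/0.97 are illustrative assumptions, and the depth points are assumed to be already transformed into the world frame using the Optitrack pose.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def prob(l):
    return 1.0 / (1.0 + math.exp(-l))


# Assumed inverse sensor model and clamping bounds (illustrative values only).
L_HIT = logit(0.7)    # added to the voxel that contains the depth measurement
L_MISS = logit(0.4)   # added to voxels the ray passes through before the hit
L_MIN, L_MAX = logit(0.12), logit(0.97)


def update(l, hit):
    """Recursive log-odds update of one voxel, clamped to [L_MIN, L_MAX]."""
    l += L_HIT if hit else L_MISS
    return min(max(l, L_MIN), L_MAX)


class VoxelGrid:
    """Hypothetical sparse voxel map keyed by integer indices (a flat dict, not an octree)."""

    def __init__(self, resolution=0.05):
        self.resolution = resolution
        self.cells = {}  # voxel key -> log-odds; missing keys are unknown (p = 0.5)

    def key(self, point):
        return tuple(math.floor(c / self.resolution) for c in point)

    def insert_ray(self, origin, endpoint):
        """Integrate one depth measurement: free space along the ray, occupied at the end."""
        end_key = self.key(endpoint)
        # Simple half-voxel sampling along the ray; an exact voxel traversal would not skip cells.
        n = max(1, int(math.dist(origin, endpoint) / (0.5 * self.resolution)))
        free = {self.key(tuple(o + i / n * (e - o) for o, e in zip(origin, endpoint)))
                for i in range(n)}
        free.discard(end_key)
        for k in free:
            self.cells[k] = update(self.cells.get(k, 0.0), hit=False)
        self.cells[end_key] = update(self.cells.get(end_key, 0.0), hit=True)

    def occupancy(self, point):
        return prob(self.cells.get(self.key(point), 0.0))
```

Clamping keeps a voxel that has been observed many times from becoming so certain that a few new measurements can no longer change it.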

"Hand-Gesture Based Drone Control" (Alessandro Luppi)

Description

A monocular vision sensor is used to recognize basic hand gestures, which serve as commands for a micro aerial vehicle (MAV).

Sensors Involved

Optitrack Motion Capture System, external Monocular Vision RGB Camera

"Visual Marker Following Drone" (Victor Palos)

Description

The onboard monocular vision camera is used to track and follow a visual AR marker in 4DOF.

Sensors Involved

Optitrack Motion Capture System, onboard Monocular Vision RGB Camera

"Hula Hoop Following Drone" (Fabian Golser)

Description

The onboard monocular RGB camera of the drone is used to recognize a target (a hula hoop) and estimate its position. Once the target has been acquired, the drone should fly through it.

Sensors Involved

Optitrack Motion Capture System, onboard Monocular Vision RGB Camera

"ORB-SLAM2 Based Indoor Reconstruction" (Michael Stocker)

Description

The ORB-SLAM2 framework is to be tested with the drone's onboard sensors, so that the drone can localize itself and create an Octomap-based 3D occupancy grid for visualization.

Sensors Involved

Optitrack Motion Capture System, Orbbec Astra RGBD Sensor

"Snapdragon Flight Based Object Recognition And Waypoint Following" (Markus Schratter)

Description

The second onboard XU4 single-board computer of the drone platform should be complemented or even replaced by the more compact Snapdragon Flight board, which already includes a vision camera. Further, based on the images from this camera, the drone should autonomously detect visual objects (patterns) during flight and follow a path determined by them.

Sensors Involved

Optitrack Motion Capture System, Snapdragon Flight Board

STUDENT PROJECTS

Projects Of Winter Term 2016/2017

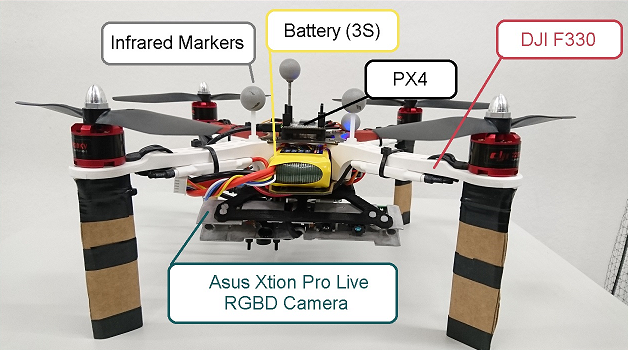

"3D-Object Reconstruction Using A Camera Drone With RGBD-Sensor"

Bachelor Thesis, Winter Term 2016, Student: David Fröhlich

Based on the F330 MAV platform of the Micro Aerial Projector, an RGBD-sensor-equipped reconstruction drone was designed to semi-autonomously reconstruct 3D objects.

RESEARCH PROJECTS

The following research projects use or have used the droneSpace for their experiments:

- UFO - User's Flying Organizer (2015-2018)

- VMAV Project (2015)

...

Student 1

Also check out our new videos from last term's lecture, which you can find here!
