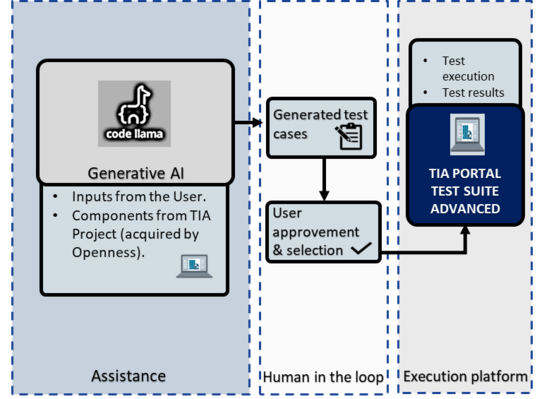

Testing PLC code is crucial for the safety and reliability of PLC applications. Although testing platforms have existed for many years, test cases are often still written by hand. This project examines the potential of Large Language Models (LLMs) for generating test cases automatically. The student will first build an understanding of LLMs and then focus on fine-tuning them. Next, they will work with TIA Portal and Siemens PLCs to create basic applications, generate tests with the fine-tuned LLM, and run these tests with the Test Suite add-on. Finally, the generated tests will be evaluated for correctness.

Figure 1: Integration of the Automated Testing Assistant into the Siemens TIA Portal tooling landscape

Thesis Type:

- Master Thesis / BSc Thesis / Seminar-Project

Goals and Tasks:

- Development of TIA Portal sample projects including safety blocks;

- Creation of Test Suite code to run safety tests on the created projects;

- Augmentation and diversification of a pre-made dataset through rephrasing;

- Fine-tuning Code Llama 7B through Parameter-Efficient Fine-Tuning (PEFT);

- Using standard metrics to evaluate the model's performance;

- Running generated code on Test Suite for quality assurance.
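The task list does not say which PEFT method is meant. The sketch below is a rough illustration of why PEFT makes fine-tuning a 7B model tractable. It assumes LoRA (low-rank adaptation), a widely used PEFT method, with rank-8 adapters on the attention query and value projections only. These choices are assumptions, not part of the project description, and the helper names `lora_params` and `lora_trainable` are hypothetical. The sketch uses the Llama 2 7B shape that Code Llama 7B inherits: 32 layers and hidden size 4096.

```python
# Minimal sketch: count the trainable parameters LoRA adds to a Llama-style model.
# Assumptions (not from the project description): LoRA is the PEFT method,
# adapters sit on the query and value projections only, rank r = 8.
# Code Llama 7B uses the Llama 2 7B shape: 32 layers, hidden size 4096.


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA learns the update of a frozen d_out x d_in weight as B @ A,
    with A: rank x d_in and B: d_out x rank, i.e. rank * (d_in + d_out) values."""
    return rank * (d_in + d_out)


def lora_trainable(num_layers: int, hidden: int, rank: int, matrices_per_layer: int) -> int:
    """Total LoRA parameters when every adapted matrix is hidden x hidden
    (true for q_proj and v_proj in the 7B model)."""
    return num_layers * matrices_per_layer * lora_params(hidden, hidden, rank)


if __name__ == "__main__":
    trainable = lora_trainable(num_layers=32, hidden=4096, rank=8, matrices_per_layer=2)
    frozen_qv = 32 * 2 * 4096 * 4096  # size of the frozen q/v matrices being adapted
    print(f"LoRA trainable params: {trainable:,}")
    print(f"relative to the adapted q/v weights: {trainable / frozen_qv:.4%}")
```

Under these assumptions, about 4.2 million parameters are trained. That is well under 0.1 % of the roughly 6.7 billion parameters in the base model. The base weights stay frozen, so only the small adapter matrices need gradients, optimizer state, and storage in the fine-tuned checkpoint.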
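The description leaves the "standard metrics" open. One common choice for code generation is pass@k, the probability that at least one of k sampled outputs is correct. This choice is assumed here rather than taken from the project. Suppose a generated test counts as correct when it runs successfully on Test Suite (also an assumption). Then the unbiased pass@k estimator introduced alongside the HumanEval benchmark can be computed from per-task counts alone. The function names and the sample counts below are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one task.

    n: number of generated candidates (e.g. generated test programs)
    c: how many of them passed (hypothetically: ran successfully on Test Suite)
    k: sample budget being scored
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must contain at least one passing candidate.
        return 1.0
    # 1 - P(all k drawn candidates fail), drawing without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over tasks, each given as (n, c)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical counts for three safety blocks, 10 generated tests each.
    results = [(10, 3), (10, 7), (10, 0)]
    for k in (1, 5):
        print(f"pass@{k} = {mean_pass_at_k(results, k):.3f}")
```

The sketch computes from the combinatorial formula rather than by repeatedly sampling k candidates, so the estimate is exact for the given counts. This matters when only a handful of tests are generated per block.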

Recommended Prior Knowledge:

- Basic programming skills in a language such as Python, C, or C++;

- Experience with TIA Portal, or with ladder logic in general, is preferred but not required;

- Previous experience or interest in functional safety and automation.

Start:

Contact: