Author Information

The conference is single-track, with no poster session. All accepted papers will be presented by their authors as oral presentations (20 min talk + 5 min discussion). Please try to stay within your time slot, since the schedule is very tight. A PC is available at the conference venue, but you are free to use your own computer. If you want to use the available PC, please transfer your presentation to it during the break before your session. Similarly, if you use your own computer, please check during the break before your session that it works with the projector.

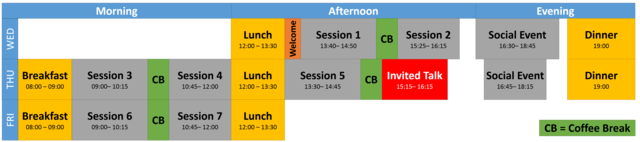

Conference Program

Registration at the conference venue opens on February 6th at 11:15.

Download the full conference program.

Wednesday, 06.02.2019

| 12:00-13:25 | Lunch |

| 13:30-13:40 | Welcome Speech by the Organizers |

| 13:40-14:55 | Session 1 – Computer Vision in the Wild |

| 13:40-14:05 | Leveraging Outdoor Webcams for Local Descriptor Learning (Milan Pultar, Dmytro Mishkin, Jiri Matas) |

| 14:05-14:30 | Image Retrieval under Varying Illumination Conditions (Tomáš Jeníček, Ondrej Chum) |

| 14:30-14:55 | Quantitative Affine Feature Detector Comparison Based on Real-World Images Taken by a Quadcopter (Zoltán Pusztai, Levente Hajder) |

| 14:55-15:25 | Coffee Break |

| 15:25-16:15 | Session 2 – Beyond Computer Vision |

| 15:25-15:50 | Counting slope regions in the surface graphs (Darshan Batavia, Rocio Gonzalez-Diaz, Walter G. Kropatsch, Rocio Moreno Casablanca) |

| 15:50-16:15 | Geometric Projection Parameter Consensus: Joint 3D Pose and Focal Length Estimation in the Wild (Alexander Grabner, Peter M. Roth, Vincent Lepetit) |

| 16:15-16:30 | Preparation for Social Event |

| 16:30-18:45 | Social Event – Sub Terra Vorau, Guided tour through subterranean pathways |

| 19:00 | Dinner |

Thursday, 07.02.2019

| 08:00-09:00 | Breakfast |

| 09:00-10:15 | Session 3 – Benchmarks and Datasets |

| 09:00-09:25 | An Unbiased Look at Face Hallucination (Klemen Grm, Martin Pernuš, Leo Cluzel, Simon Dobrisek, Vitomir Struc) |

| 09:25-09:50 | SyDD: Synthetic Depth Data Randomization for Object Detection using Domain-Relevant Background (Stefan Thalhammer, Kiru Park, Timothy Patten, Markus Vincze, Walter G. Kropatsch) |

| 09:50-10:15 | Benchmarking Semantic Segmentation Methods for Obstacle Detection on a Marine Environment (Borja Bovcon, Matej Kristan) |

| 10:15-10:45 | Coffee Break and Group Photo |

| 10:45-12:00 | Session 4 – Space-Time Methods |

| 10:45-11:10 | Situation-Aware Pedestrian Trajectory Prediction with Spatio-Temporal Attention Model (Sirin Haddad, Meiqing Wu, Wei He, Siew-Kei Lam) |

| 11:10-11:35 | A Spatiotemporal Generative Adversarial Network to Generate Human Action Videos (Stefan Ainetter, Axel Pinz) |

| 11:35-12:00 | Combining Top-Down and Bottom-Up Processes to Extract Space-Time Volumes of Interest from Video (Filip Ilic, Axel Pinz) |

| 12:00-13:30 | Lunch |

| 13:30-14:45 | Session 5 – Geometric Vision |

| 13:30-13:55 | Robust Fitting of Geometric Primitives on LiDAR Data (Tóth Tekla) |

| 13:55-14:20 | MAGSAC: marginalizing sample consensus (Dániel Baráth, Jiri Matas, Jana Noskova) |

| 14:20-14:45 | Planar Motion from a Single Affine Correspondence (Levente Hajder, Dániel Baráth) |

| 14:45-15:15 | Coffee Break |

| 15:15-16:15 | Invited Talk: Self-supervision for 3D Shape and Appearance modeling (Gabriel Brostow, University College London) |

| 16:45-18:15 | Social Event – Cider Tasting (Mostverkostung) |

| 19:00 | Dinner |

Friday, 08.02.2019

| 08:00-09:00 | Breakfast |

| 09:00-10:15 | Session 6 – Visual Learning |

| 09:00-09:25 | Improving CNN classifiers by estimating test-time priors (Milan Sulc, Jiri Matas) |

| 09:25-09:50 | The Human is Always Right: The Cognitive Relevance Transform (Gregor Koporec, Andrej Košir, Aleš Leonardis, Janez Perš) |

| 09:50-10:15 | Deep Learning for Surface-Defect Detection (Domen Tabernik, Samo Šela, Jure Skvarč, Danijel Skocaj) |

| 10:15-10:45 | Coffee Break |

| 10:45-12:00 | Session 7 – Object Detection and Pose Estimation |

| 10:45-11:10 | Pulling on socks by a force-compliant robot (Megumi Miyashita, Vladimír Kubelka, Vaclav Hlavac) |

| 11:10-11:35 | Viktor Kocur |

| 11:35-12:00 | Object Tracking by Reconstruction with View-Specific Discriminative Correlation Filters (Ugur Kart, Alan Lukezic, Matej Kristan, Joni-Kristian Kamarainen, Jiri Matas) |

| 12:00-12:15 | Closing Ceremony and Awards |

| 12:15-13:30 | Lunch |

Invited Speaker

Gabriel Brostow is a Professor of Machine Vision at University College London. His group specializes in Human-in-the-Loop computer vision, with applications spanning vision for scientific exploration and vision for computer graphics. He received his PhD from Georgia Tech and worked at Cambridge University and ETH Zurich before starting his group at UCL. He is an Associate Editor for PAMI, was co-program chair for BMVC 2017, and is co-program chair for ECCV 2022.

Self-supervision for 3D Shape and Appearance modeling.

A single glimpse is hardly enough to triangulate the 3D shapes of a scene. But many glimpses taken together can give enough supervision to accomplish interesting tasks, such as depth from a single photo, volume from a single depth, and appearance of objects and scenes from novel viewing angles. In this talk, I will distill the main lessons we have learned recently in attempting to a) design networks that understand "a bit" about 3D, and b) train networks to predict depth, volumes, or appearance for several application domains. Some details matter, and the data itself is a key ingredient. There is still more exciting work to be done!

This talk will cover equivariance, consistency losses, and some personal views on diversity in predictions.